I would have thought that dragging in data from SQL Server into Excel (both Microsoft products) would be easy - and it is if you're looking to drag in entire tables, views, etc. But if you want to do something a little more complicated with parameters it becomes a lot harder and less intuitive to get it to work.

The example below shows how to get the ExecutionLogs from a SQL Server instance between two dates.

I'm going to use Excel 2013 as it's the latest version and the one I have to hand.

Create a blank workbook by selecting "Blank workbook" (which is usually the first option in the middle of the screen);

|

| Excel 2013: New "Blank workbook" Tile |

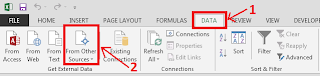

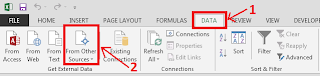

Select the "Data" page in the ribbon and then click on "From Other Sources" in the "Get External Data" part of the ribbon (on the left). Select "From Microsoft Query" (which should be the very bottom option);

|

| Excel 2013: Data Page |

NOTE: you may think selecting "SQL Server" is a slightly more obvious choice. However this will not allow you to use parametrized SQL - it's just for direct export from tables or views (why that's the case if beyond me!).

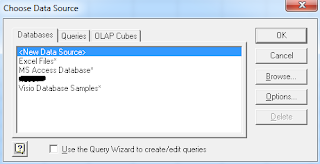

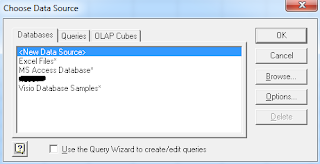

This will then open the "Choose Data Source" dialog;

|

| Excel 2013: Choose Data Source Dialog |

This dialog clearly dates from an earlier version of Windows and it's difficult to see how Microsoft couldn't have "updated" this with the rest of the 2013 look-and-feel. I'm running Windows 7 but I have have a sneaking suspicion that everyone from Windows XP onwards will be familiar with this dialog (although possibly not with the addition of "OLAP Cubes").

This dialog also isn't part of Excel, it's a separate application. Sure Microsoft will score some marks for re-use of a standard Windows component but the change in interface is jarring to say the least ... and it gets worse.

Leave "New Data Source

" highlighted and click "OK";

|

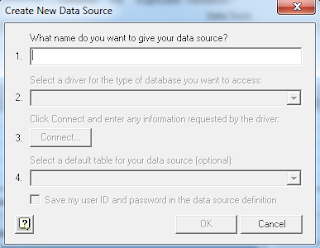

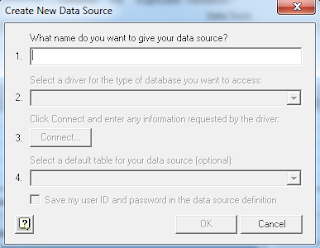

| Excel 2013: Create New Data Source Dialog |

We seem to have slipped back to a pre-wizard era and we now have fields labelled 1 to 4. When we complete field 1, field 2 becomes available, on completing field 2 field 3 becomes available. This is jarring different from the other dialogs within Excel 2013.

Anyway populate fields and 2 in the dialog, selecting "SQL Server" from the drop down (in mine it was at the very bottom). Then click "Connect ...";

|

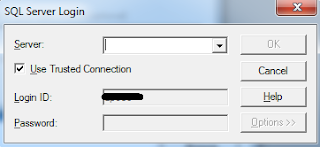

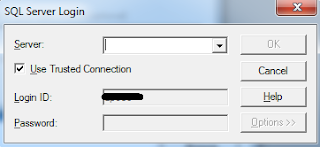

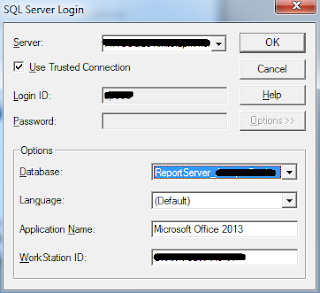

| Excel 2013: SQL Server Login |

Enter the login information - "Use Trusted Connection" means use your already authenticated (Active Directory) credentials - once you've entered a Server the "Options" box at the bottom right will become available, click on it;

|

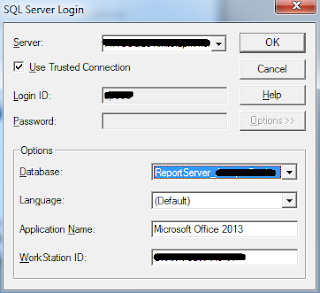

| Excel 2013: SQL Server Login Dialog - Extended |

Use the "Database" drop down to select the database you wish to connect to. If you leave it as default it will pick the default database for your database user.

Click "OK".

Click "OK" again (on the "Create Data Source" dialog) - do not pick a table in the bottom drop down, we're going to use SQL with parameters.

The data source you just created should be select (in the "Choose Data Source" dialog) so just click "OK".

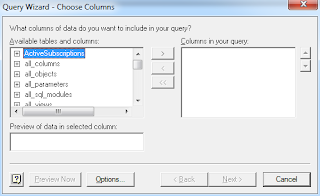

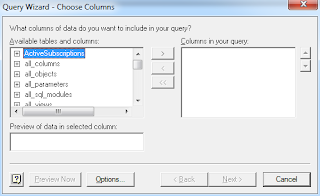

You will then be presented with the "Query Wizard - Choose Columns" dialog;

|

| Excel 2013: Query Wizard - Choose Columns |

Now you'll notice that you can't do anything from this stage *except* select a table.

Click "Cancel" (at the bottom right);

|

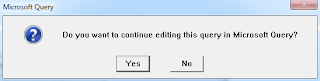

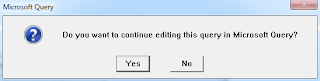

| Excel 2013: Microsoft Query Confirmation Dialog |

Click "Yes";

|

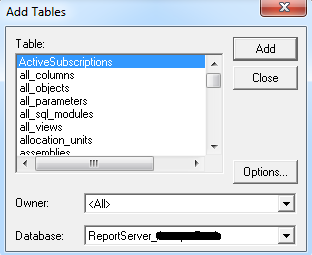

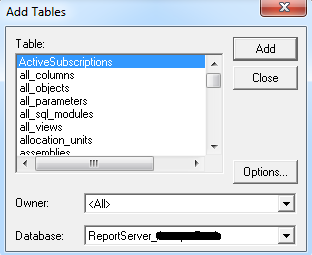

| Excel 2013: Add Tables Dialog |

We're not working with tables so click "Close";

|

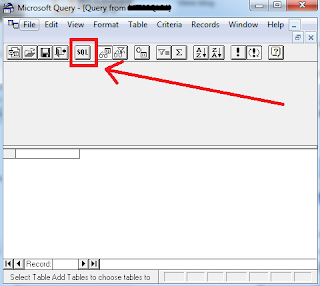

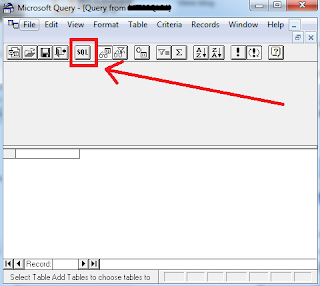

| Excel 2013: Microsoft Query |

Click on the "SQL" button on the menu bar;

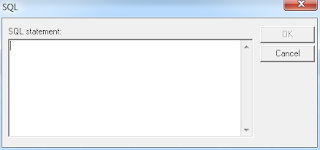

|

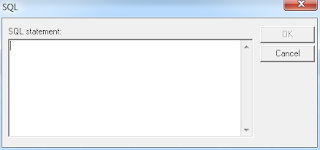

| Excel 2013: Microsoft Query - SQL Dialog |

Here is the SQL we are going to use;

SELECT

EL.InstanceName,

EL.ItemPath,

EL.UserName,

EL.ExecutionId,

EL.RequestType,

EL.Format,

EL.Parameters,

EL.ItemAction,

EL.TimeStart,

EL.TimeEnd,

EL.TimeDataRetrieval,

EL.TimeProcessing,

EL.TimeRendering,

EL.Source,

EL.Status,

EL.ByteCount, EL.AdditionalInfo

FROM ExecutionLog3 EL

WHERE EL.TimeStart >= ["Min Start Date"]

AND EL.TimeStart < ["Max Start Date"]

ORDER BY EL.TimeStart DESC

Enter the SQL and click "OK".

NOTE: There are a couple of "gotchas" here. The SQL is processed prior to being run and it isn't particularly flexible. If you use ANSI SQL (JOIN ... ON ...) then you won't get the graphical interface at best, or it just won't work. Equally including square-backets [] seems to break the SQL, as does having "dbo." in front of the table name.

"Broken" SQL is usually identified by you being told that the SQL doesn't support the graphical interface. This is usually a prelude to a more obscure error.

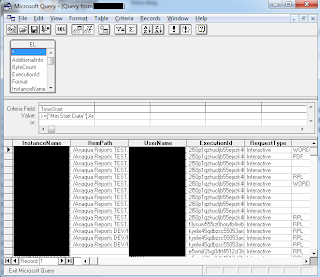

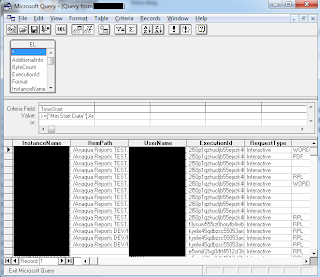

Providing everything is working OK you'll see;

|

| Microsoft Query: Sample Data |

Click on the "Exit" button (fourth from the left at the top left).

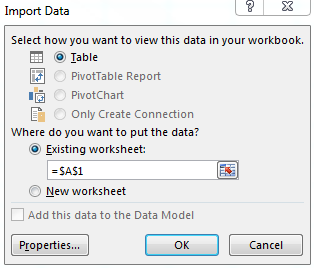

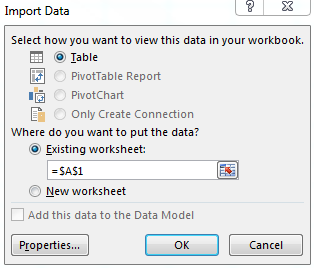

This closes Microsoft Query and returns control to Excel. The "Import Data" dialog will now appear;

|

| Excel 2013: Import Data Dialog |

Change "=$A$1" to "=$A$4" (so we have a few lines for the parameter entry boxes) and click "OK";

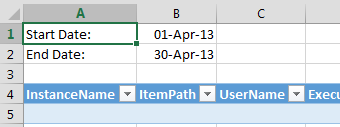

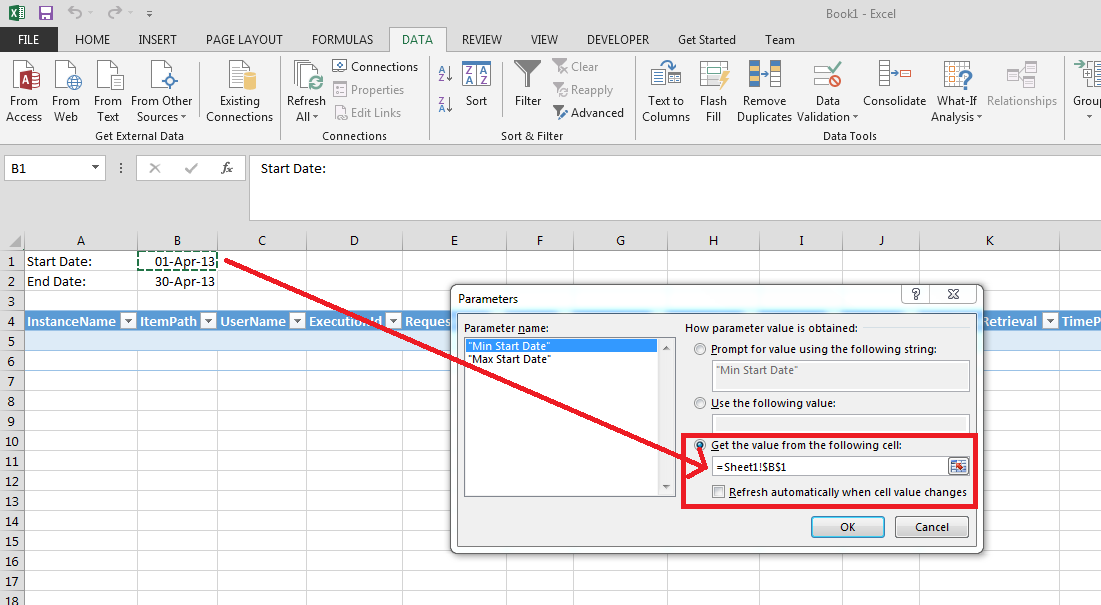

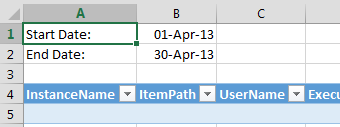

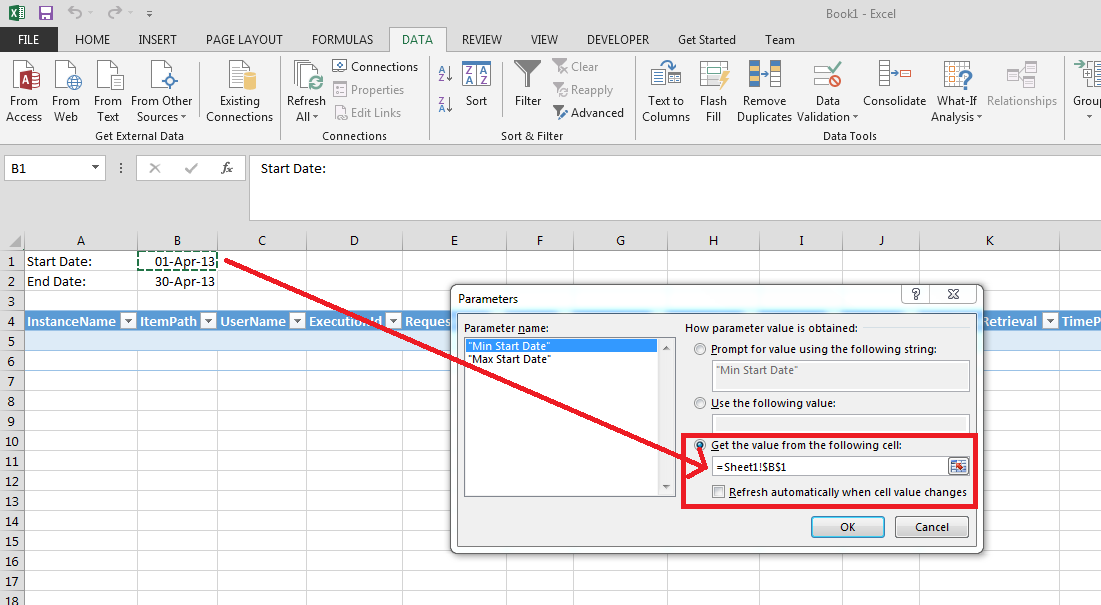

Enter "Start Date" in A1, and "End Date" into A2 (just labels). And then two dates into B1 and B2 (these will be the from/to dates we run the report as);

|

| Excel 2013: Parameter Values in Excel |

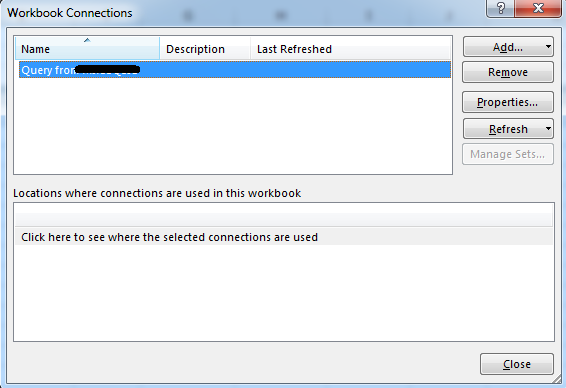

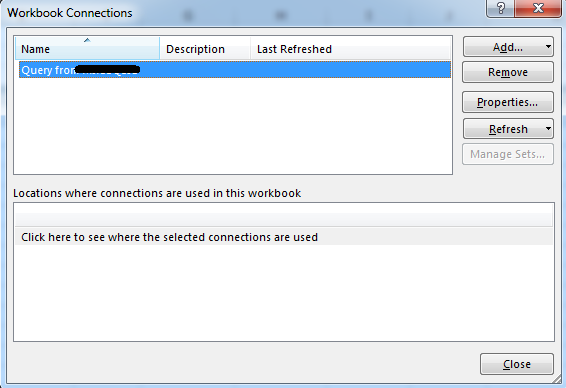

Now we need to link up the cells we've used with the parameters in use in our query. Click on the "Data" tab in the ribbon and then "Connections";

|

| Excel 2013: Connections |

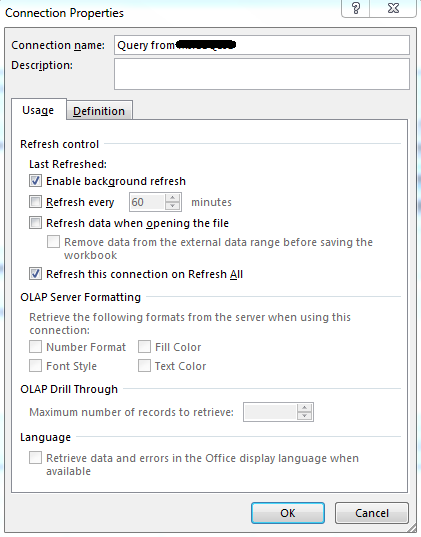

Select the connection and then click "Properties";

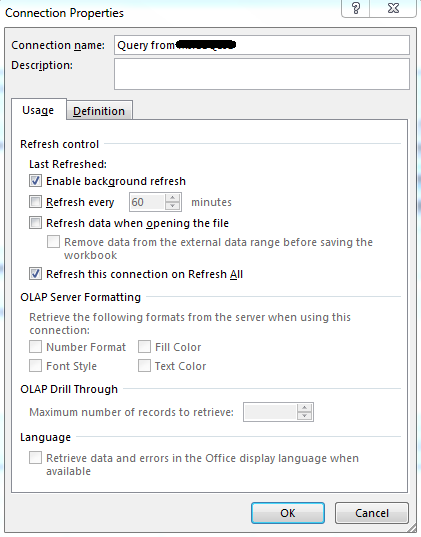

|

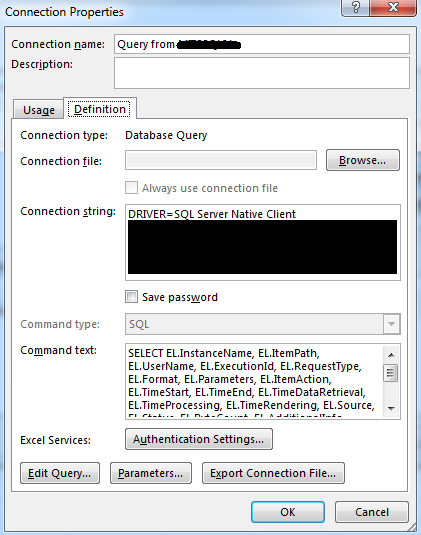

| Excel 2013: Connection Properties |

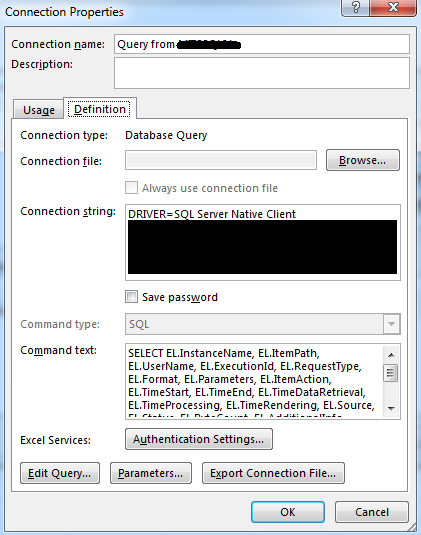

Click on the "Definition" tab;

|

| Excel 2013: Connection Properties - Definition Tab |

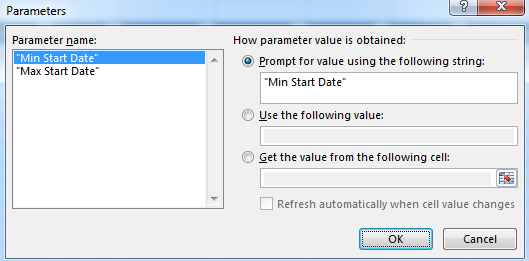

Click on the "Parameters" button at the bottom (if you have used the SQL Server option in Excel this is where you'd have the problem - "Parameters" would be permanently greyed out);

|

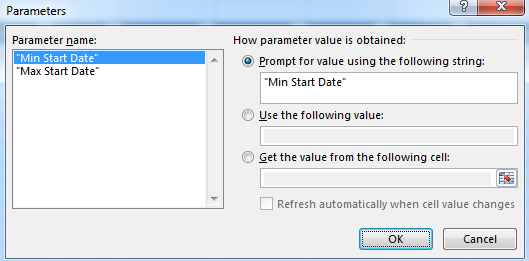

| Excel 2013: Parameters |

As you can see in the list there are two parameters, the two we created earlier in the SQL. Both are currently set to prompt us for values. Click on the "Get the value from the following cell" radio group and select the cell we have entered the Start Date in;

|

| Excel 2013: Default Parameter Value |

You can also check the "refresh automatically when cell value changes" box if you want to work that way.

Repeat the process with the Max Start Date Parameter.

Click "OK" (closed Parameters dialog)

Click "OK" (closes Connection Properties dialog)

Click "Close" (closes Workbook Connections dialog)

Click "Refresh all" (in the ribbon)

And we're done! If this was useful for you don't forget to leave a comment ...